The Singapore Student Learning Space recently introduced a Short-Answer Feedback Assistant, and this is my first attempt at testing it with a simulated student account. The purpose of the Feedback Assistant is to provide auto-generated constructive feedback on short-form written responses, based on a set of answers provided by the setter. The system uses natural language processing (NLP) algorithms that analyze the structure, semantics, and context of the mark scheme and the student’s response to understand the meaning behind the response. It then generates feedback based on that analysis. I am still in the process of figuring out how the mark scheme should be written to help the AI give the most accurate response.

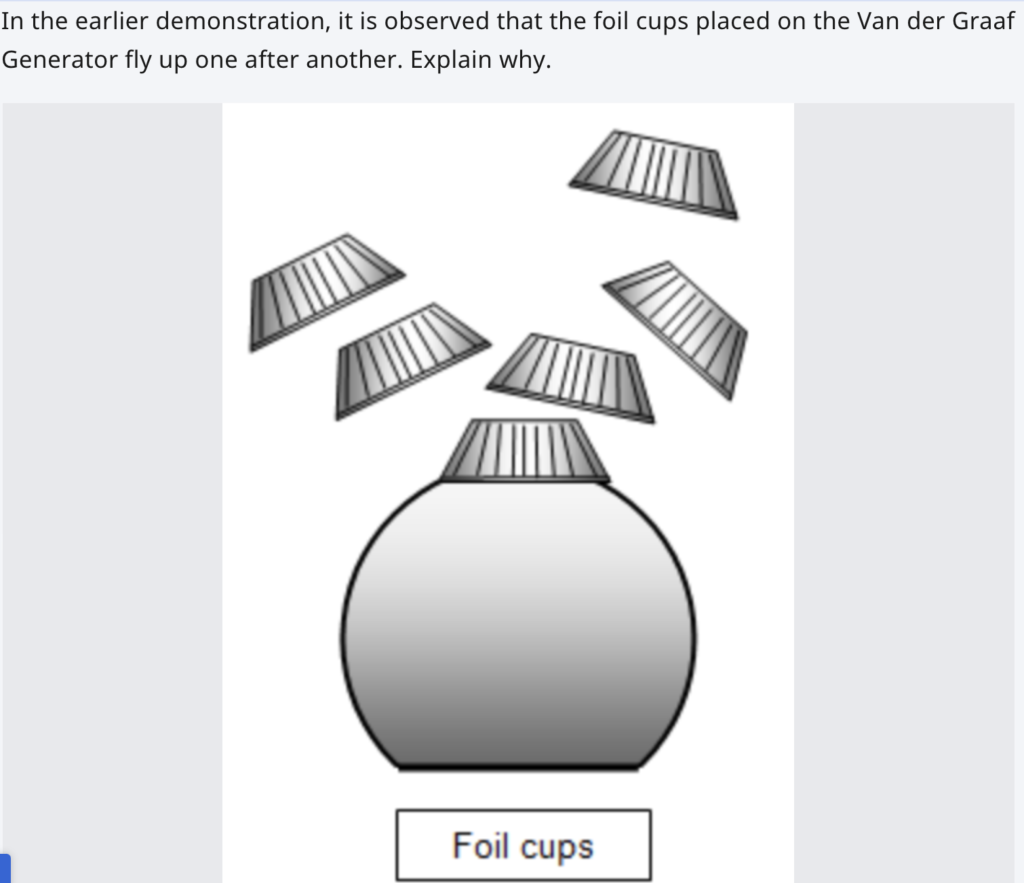

As a test question, I used the following:

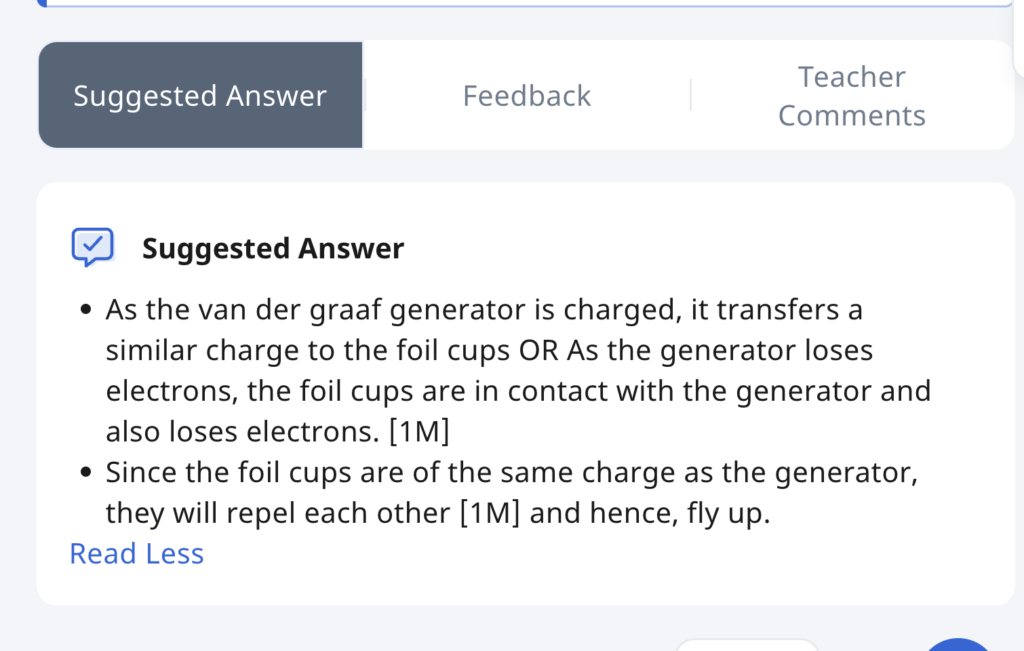

The mark scheme was written in point form for easy reading, with the mark to be awarded for each point given in square brackets.
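To make the format concrete, here is a minimal Python sketch of how a point-form mark scheme with bracketed marks could be read by a program. This is only my own illustration and not how the Feedback Assistant works internally. The function names, the regular expression, and the sample marking points are all made up for the example and are not the question shown above.

```python
import re

# Assumed format (hypothetical): one marking point per line, an optional
# bullet character, and the mark in square brackets at the end of the line.
POINT_PATTERN = re.compile(
    r"^\s*(?:[-*•]\s*)?(?P<text>.+?)\s*\[(?P<marks>\d+)\]\s*$"
)


def parse_mark_scheme(scheme: str) -> list[tuple[str, int]]:
    """Return (marking point, marks) pairs; lines without [n] are skipped."""
    points = []
    for line in scheme.splitlines():
        match = POINT_PATTERN.match(line)
        if match:
            points.append((match.group("text"), int(match.group("marks"))))
    return points


def total_marks(points: list[tuple[str, int]]) -> int:
    """Sum the marks across all marking points."""
    return sum(marks for _, marks in points)


# Made-up marking points, purely for illustration.
example_scheme = """
- Particles gain kinetic energy when heated [1]
- Particles move further apart [1]
- Volume of the substance increases [1]
"""

if __name__ == "__main__":
    for text, marks in parse_mark_scheme(example_scheme):
        print(f"{marks} mark(s): {text}")
    print("Total:", total_marks(parse_mark_scheme(example_scheme)))
```

Keeping each marking point on its own line with the mark at the end makes the scheme easy for both a human marker and a program to split into separately gradable points, which may be part of why this format seems to work well.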

The student response was as follows:

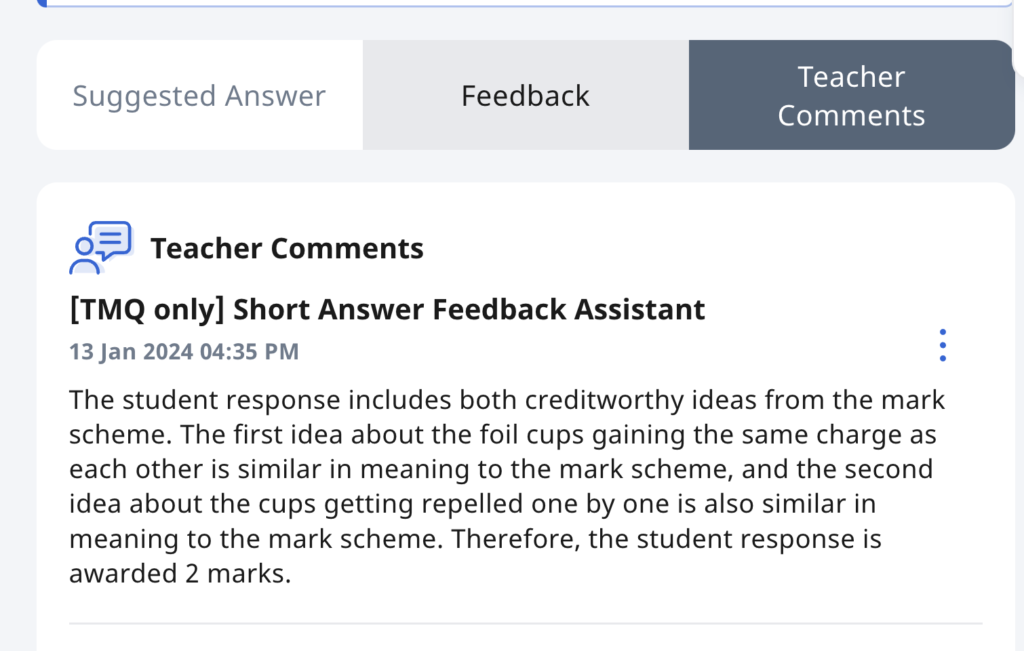

The Feedback Assistant then graded the response and proposed feedback for the student, which is found here. The NLP engine seems to work well with the format of the mark scheme given (in point form and with marks indicated in brackets). I am still going to experiment with more questions, but the results look promising for now.

However, the teacher will still likely have to keep an eye on the responses and edit them for more accurate feedback. Unfortunately, I am not teaching for the next few months as I am on a course, so I will not be able to test this out with actual students, but I look forward to doing so.

I also wonder if the auto-generated comments could be trained to provide suggestions on follow-up learning activities.